Agenda

Workshop runs from 12:00 PM to 5:00 PM

- Lunch at 2:30 PM

Multiple sections - theory + exercises

- Introduction to Containers

- Docker and Kubernetes

- Istio Service Mesh

- Traffic Routing

- Resiliency

- Security

Introduction

- I am Peter (@pjausovec)

- Software Engineer at Oracle

- Working on "cloud-native" stuff

- Books:

- Cloud Native: Using Containers, Functions, and Data to Build Next-Gen Apps

- SharePoint Development

- VSTO For Dummies

- Courses:

- Kubernetes Course (https://startkubernetes.com)

- Istio Service Mesh Course (https://learnistio.com)

By helpameout - Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=20337780

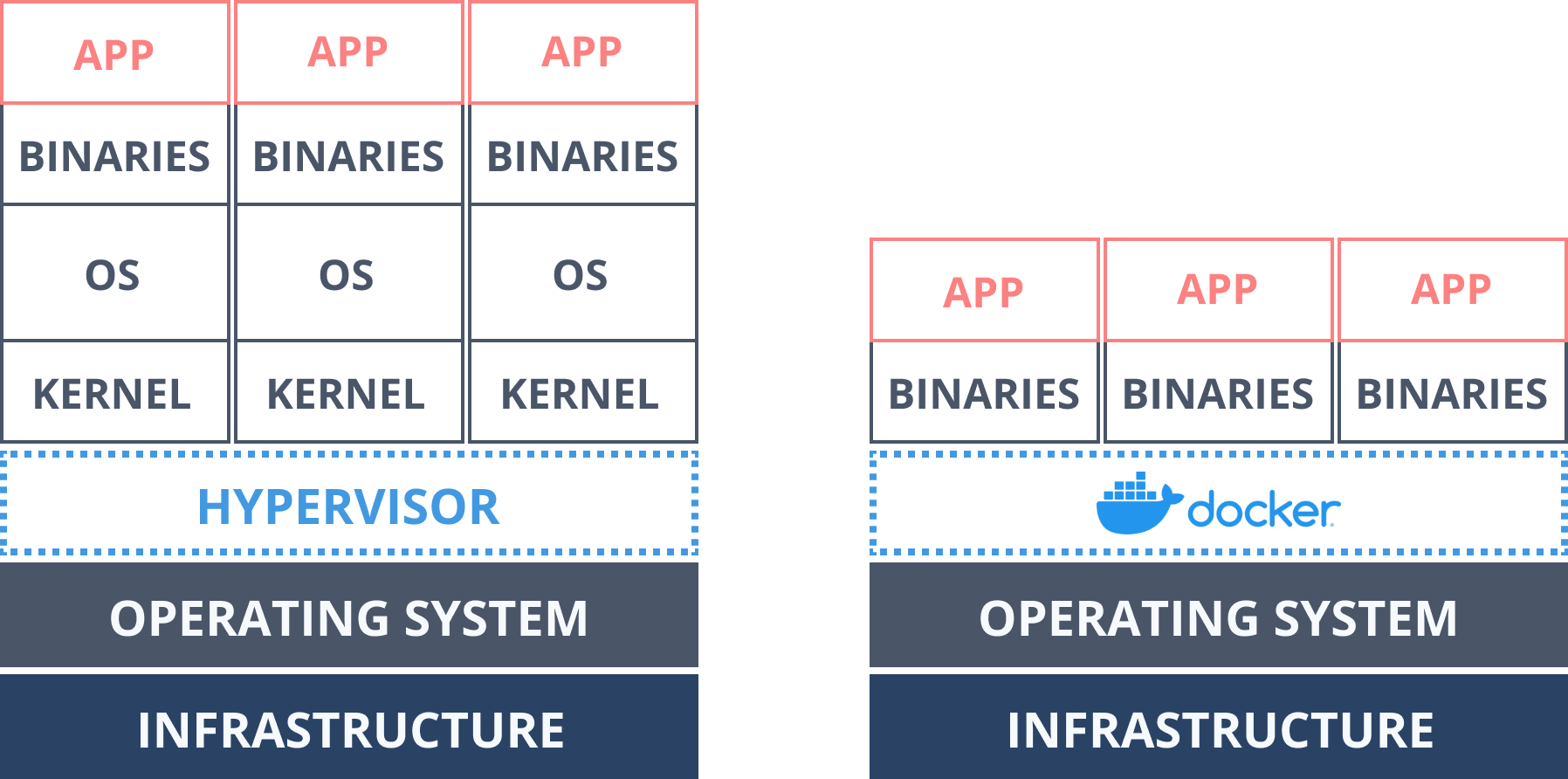

Virtualization

- 2006: VMware Server

- Run multiple operating systems on the same host

- Expensive: multiple kernels, OS ...

Docker

- First public release in 2013

- Containers existed in Linux for >10 years

- Slice the OS to securely run multiple applications

- Namespaces, cgroups

What is Docker?

Docker Engine (daemon) + CLI

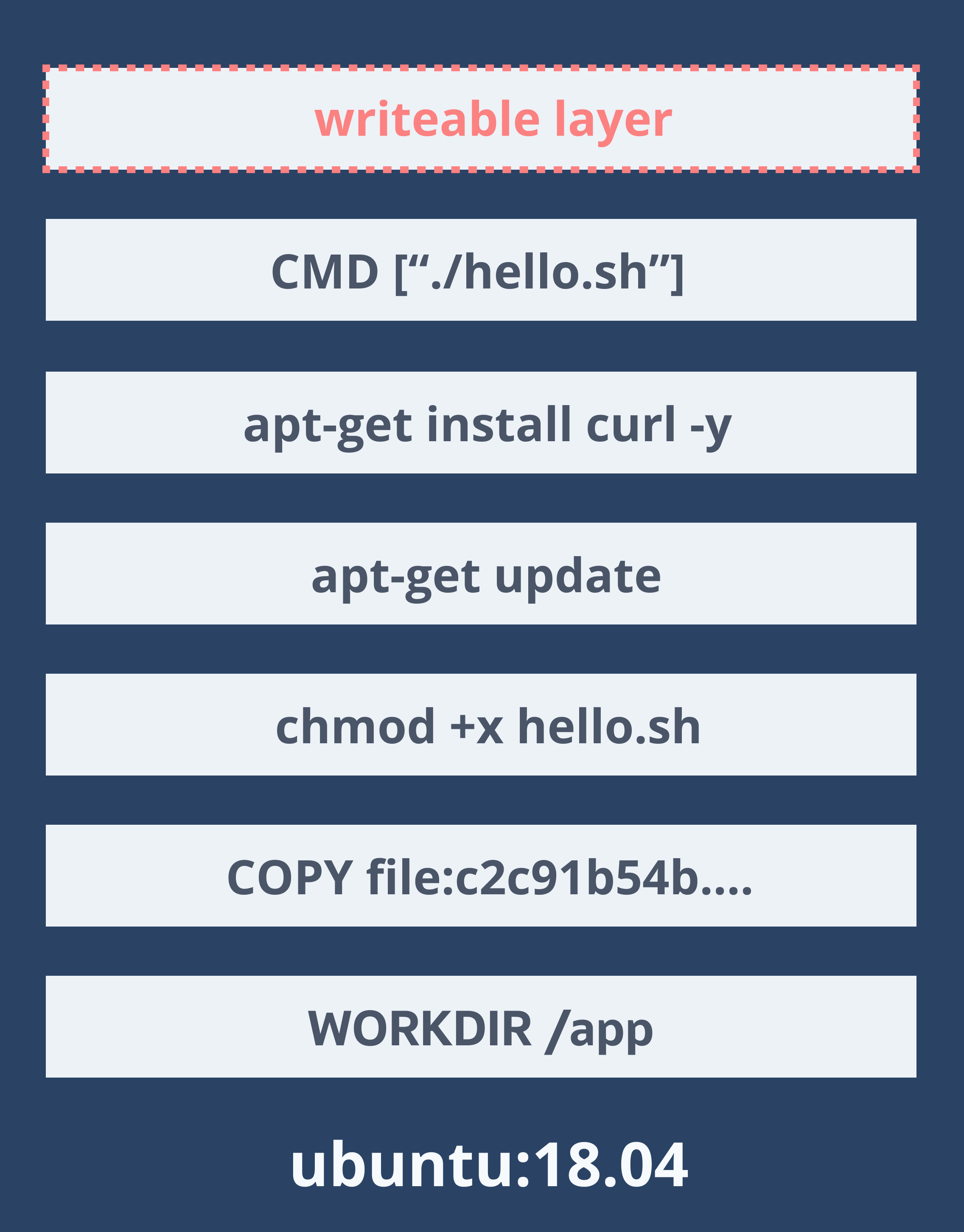

Dockerfile

    FROM ubuntu:18.04
    WORKDIR /app
    COPY hello.sh /app
    RUN chmod +x hello.sh
    RUN apt-get update
    RUN apt-get install curl -y
    CMD ["./hello.sh"]

Docker image

- Collection of layers from Dockerfile (one layer per command)

- Layers are stacked on top of each other

- Each layer is a delta from the layer before it

- All layers are read-only

Image names

- Image = repository + image name + tag, e.g. mycompany/hello-world:1.0.1

- All images get a default tag called latest

- Tag = version or variant of an image

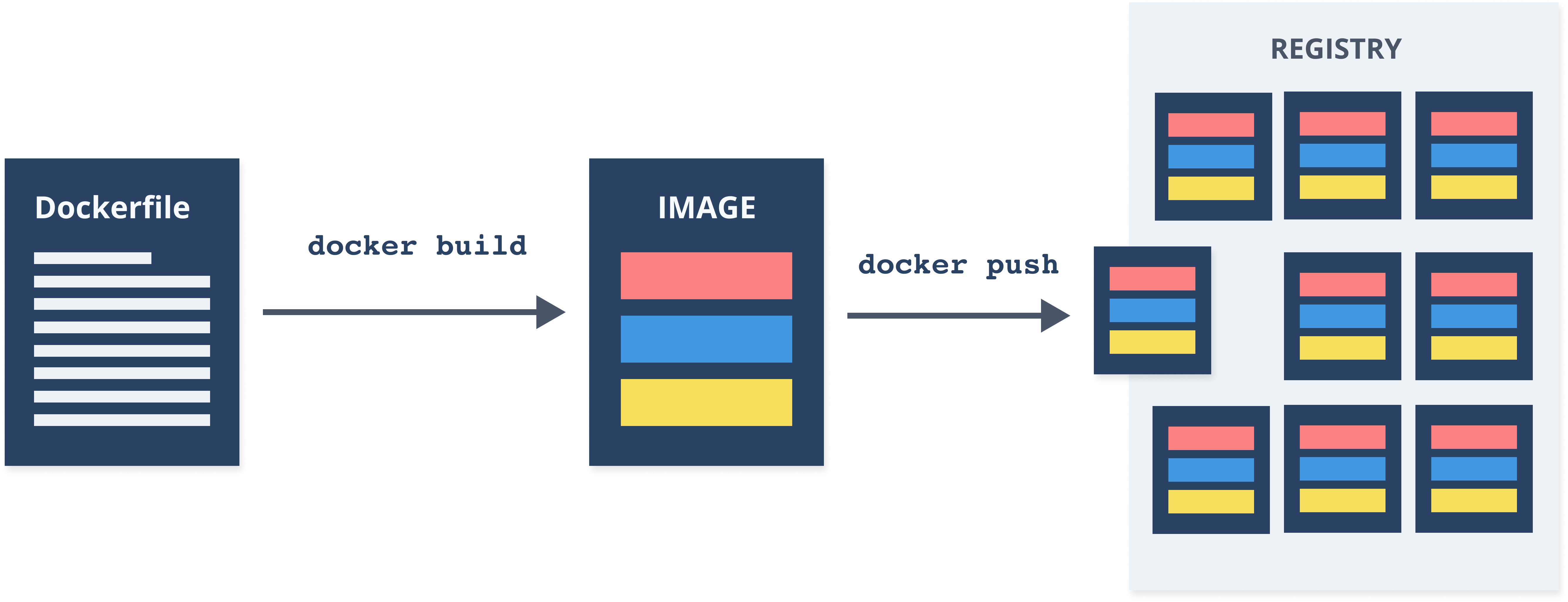

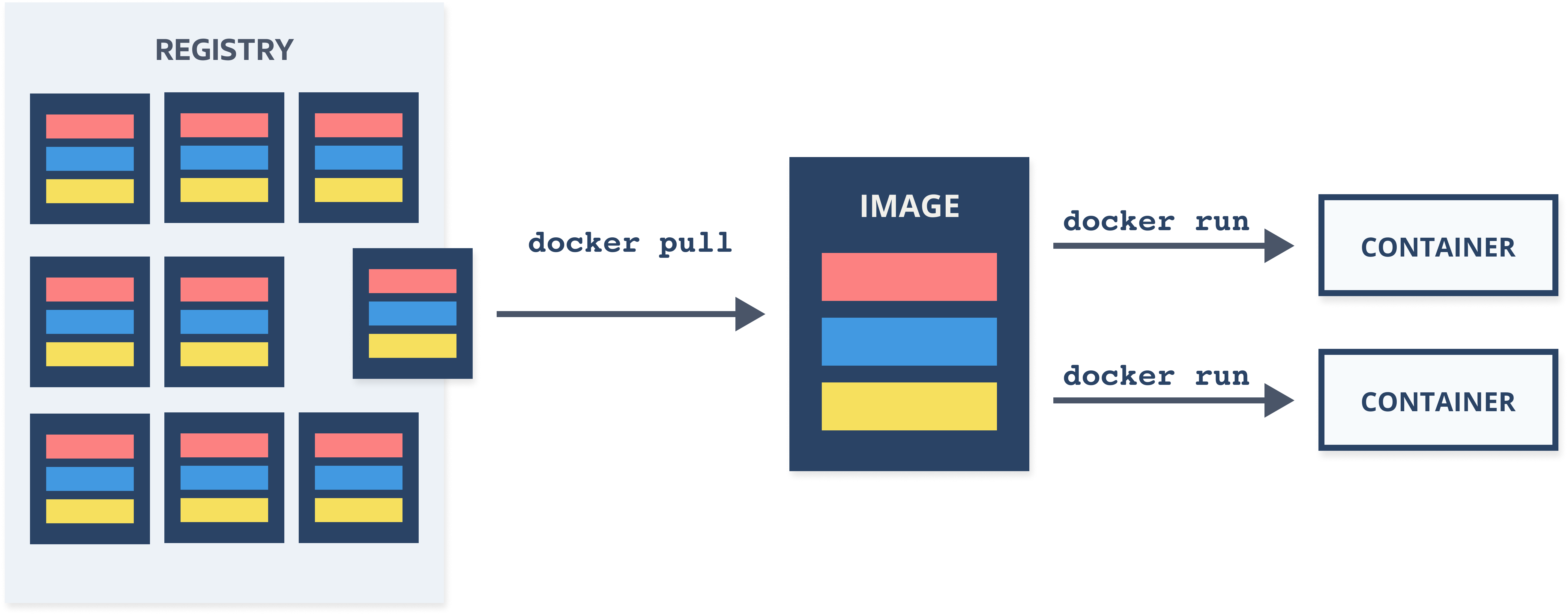

Docker Registry

- Place to store your Docker images

- Public and private repositories

- Docker Hub (https://hub.docker.com)

- Every cloud provider has its own

- You can also store images locally, on your Docker host

Container Orchestration

- Provision and deploy containers onto nodes

- Resource management/scheduling containers

- Health monitoring

- Scaling

- Connect to networking

- Internal load balancing

Kubernetes Overview

- Most popular choice for cluster management and scheduling container-centric workloads

- Open source project for running and managing containers

- K8S = KuberneteS

Definitions

Portable, extensible, open-source platform for managing containerized workloads and services

Container-orchestration system for automating application deployment, scaling, and management

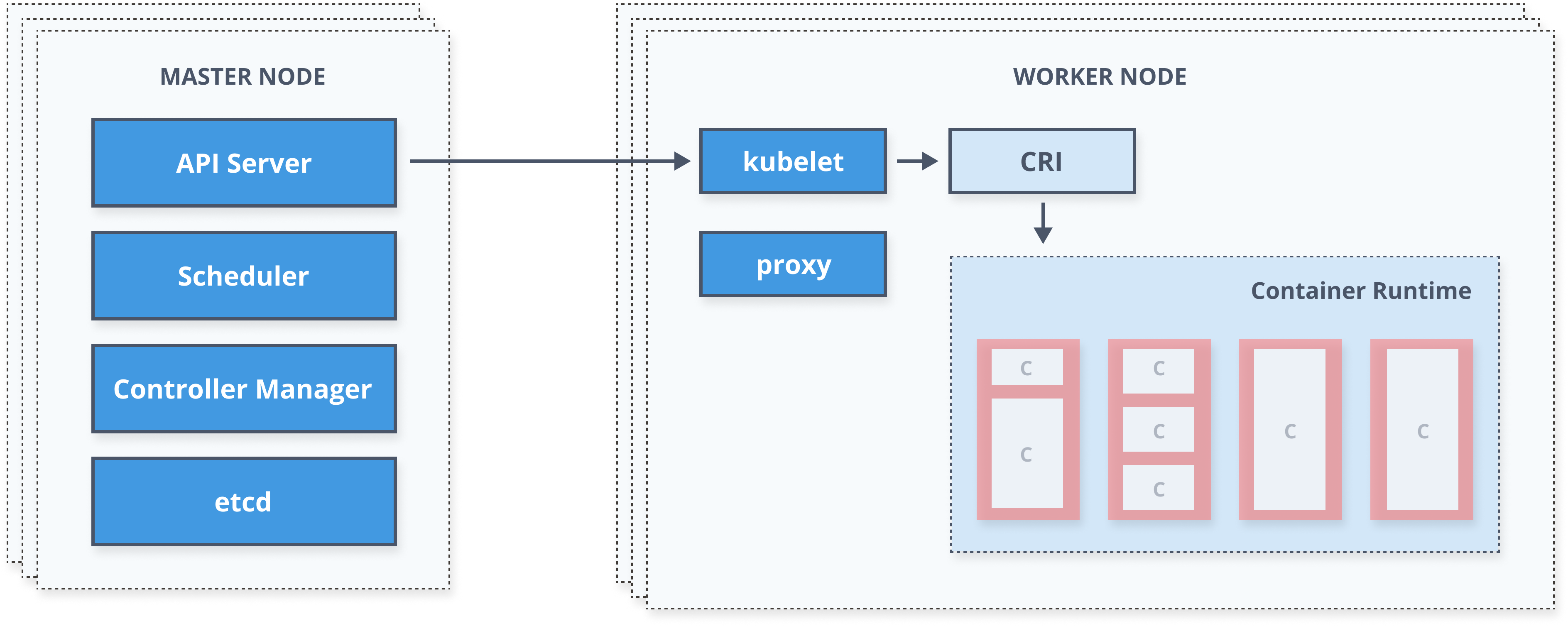

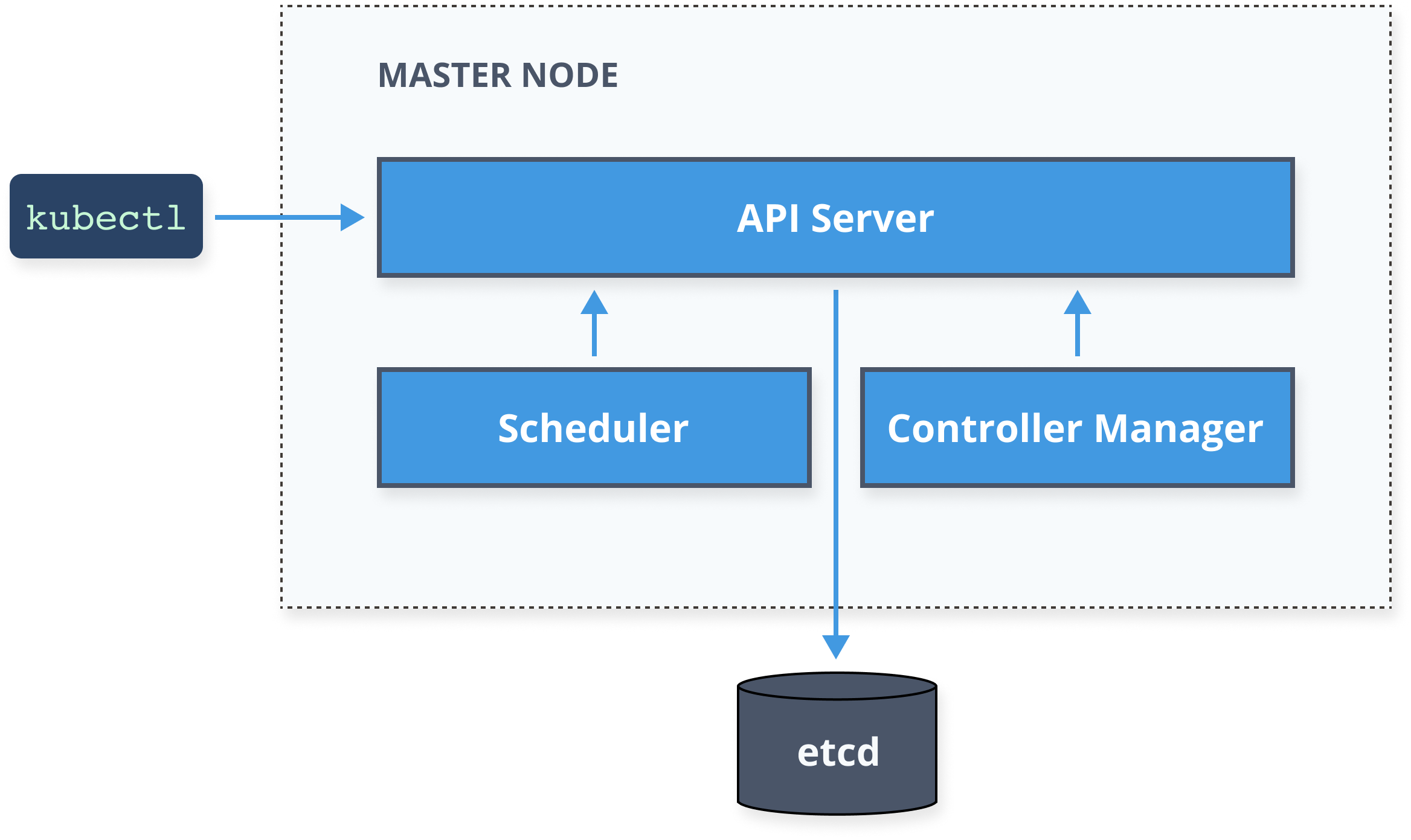

Kubernetes Architecture

Kubernetes Resources

Multiple resources defined in the Kubernetes API

- namespaces

- pods

- services

- secrets

- ...

Custom resources as well!

- CRD (Custom Resource Definition)

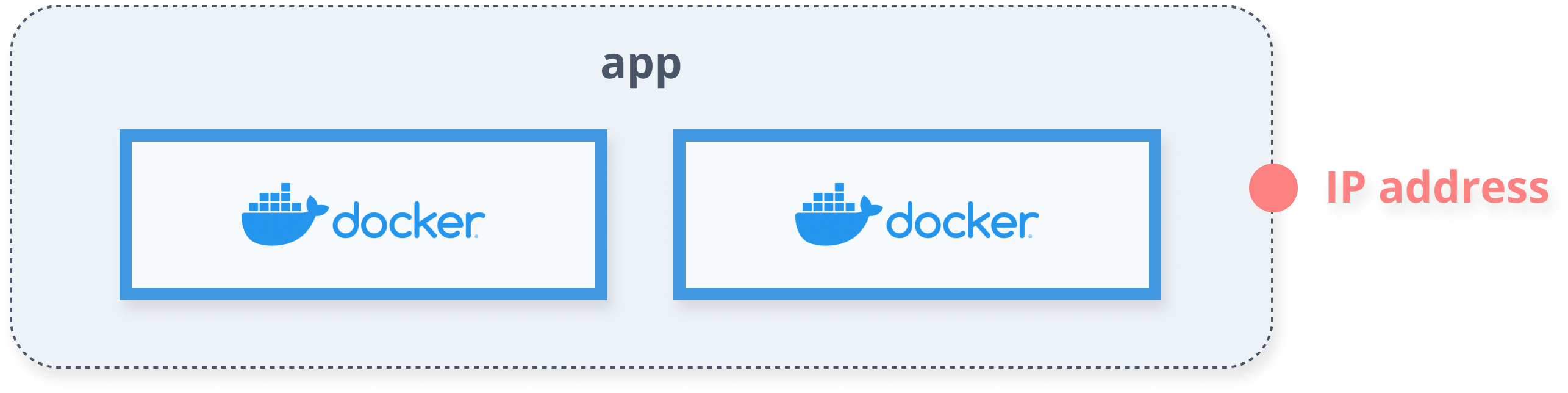

Pods

- Smallest deployable/manageable/scalable unit

- Logical grouping of containers

- All running on the same node

- Share namespace, network, and volumes

- Has a unique IP

- Controlled by a ReplicaSet

Pods

    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp-pod
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp-container
        image: busybox
        command: ['sh', '-c', 'echo Hello Minsk! && sleep 3600']

ReplicaSet

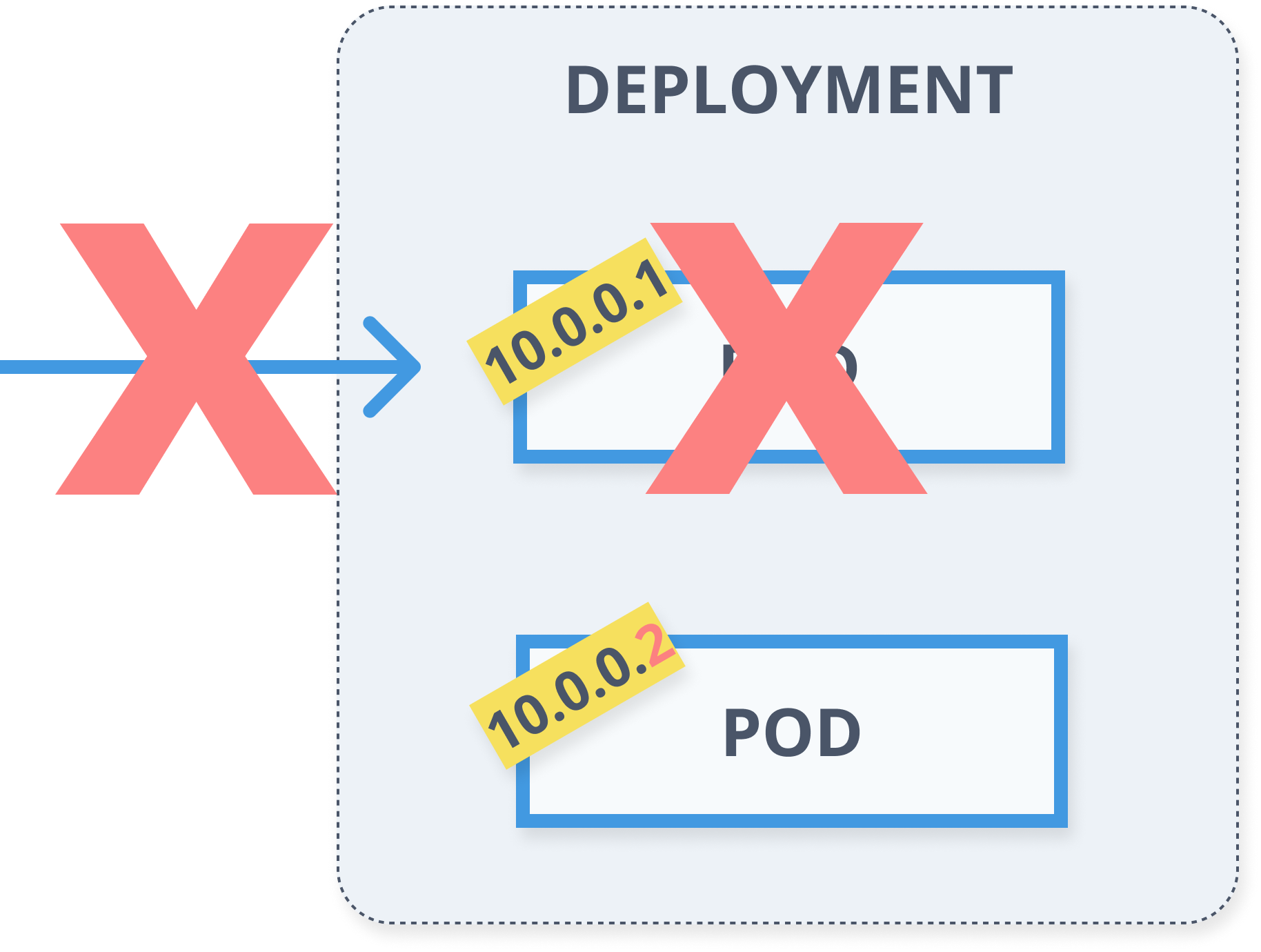

- Ensures specified number of pod replicas is running

- Creates and deletes pods as needed

- Selector + Pod template + Replica count

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: myapp
      labels:
        app: myapp
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
          - name: myapp-container
            image: busybox
            command: ['sh', '-c', 'echo Hello Minsk! && sleep 3600']

Demo

Pods and ReplicaSets

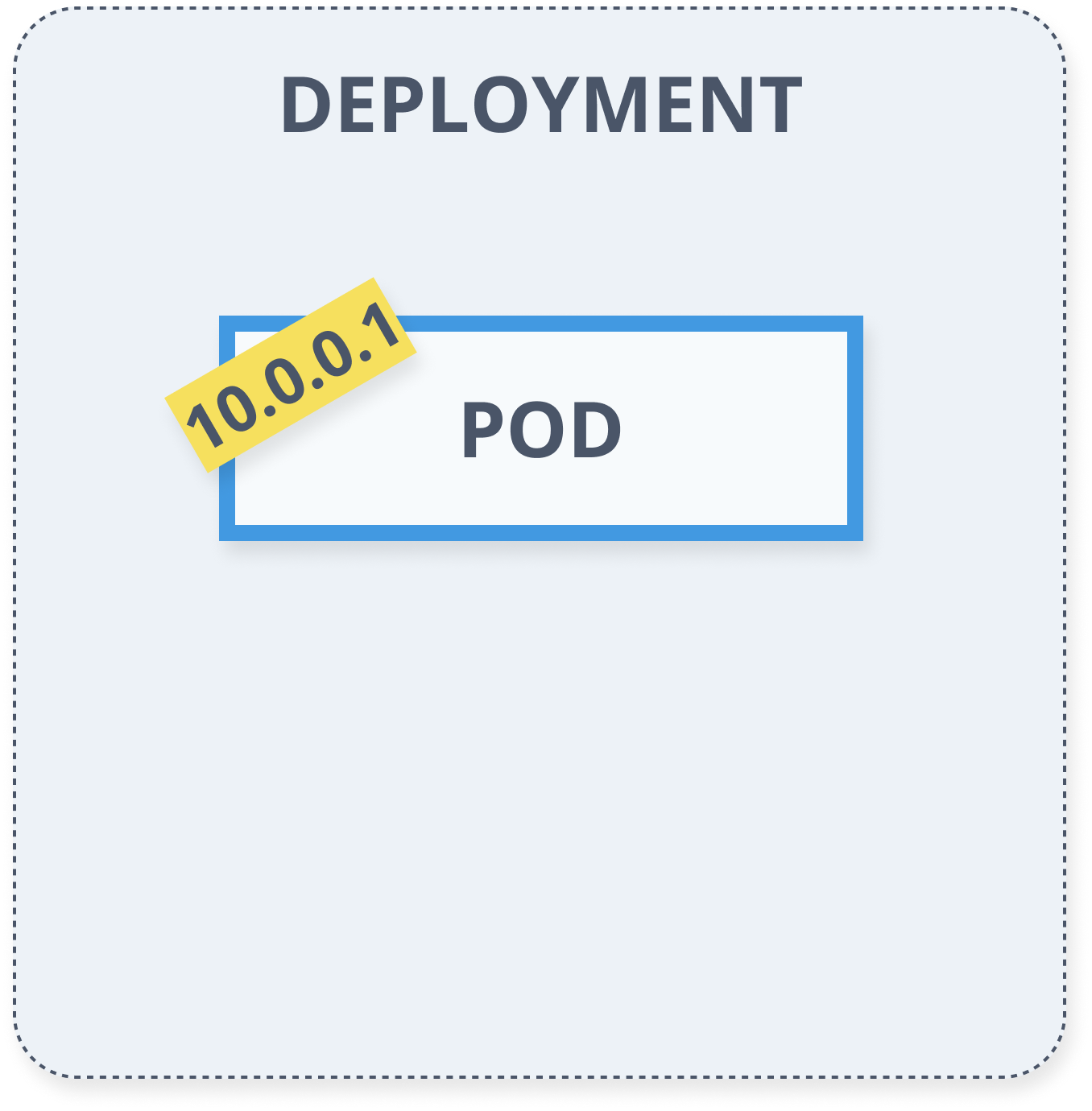

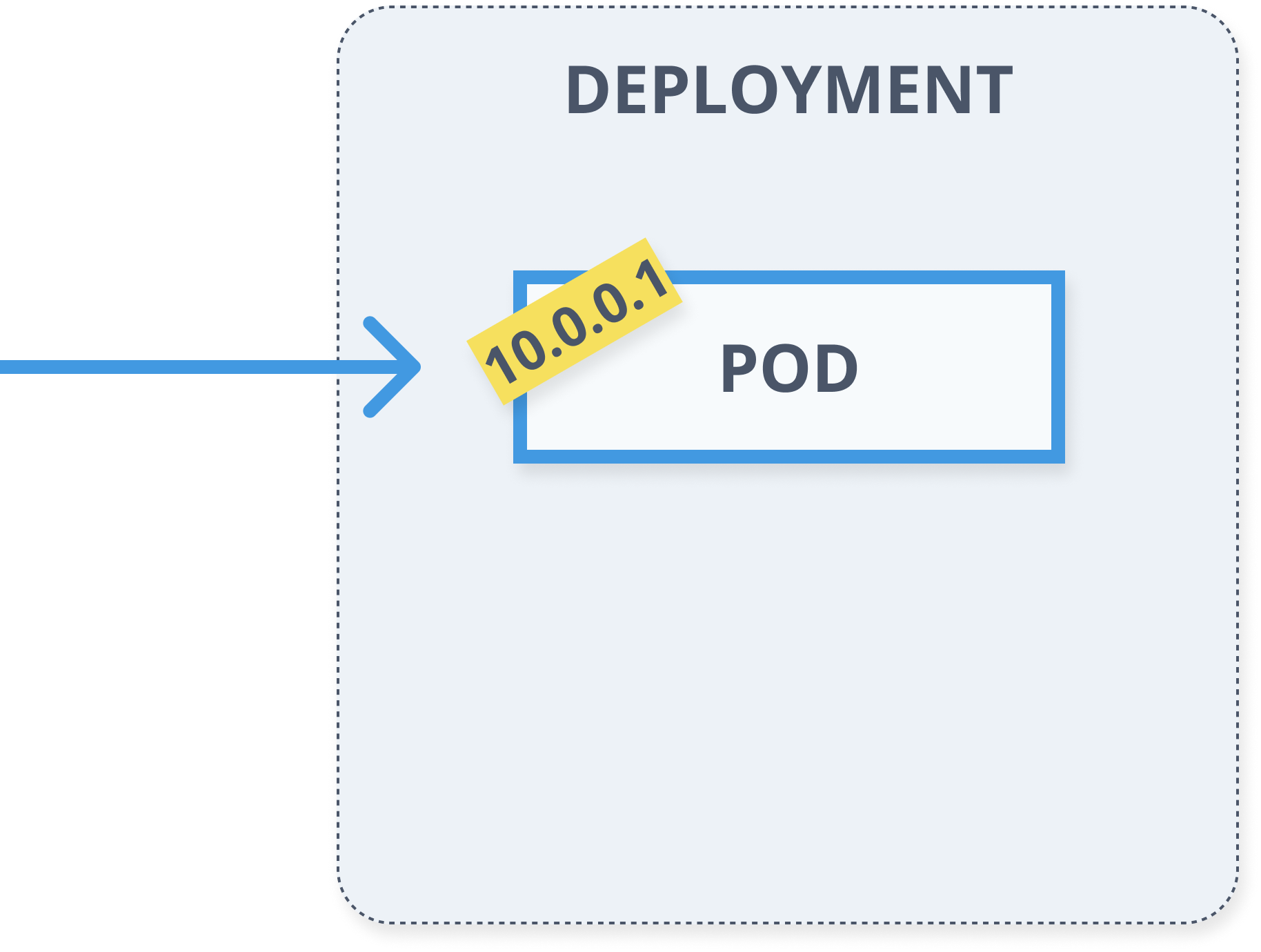

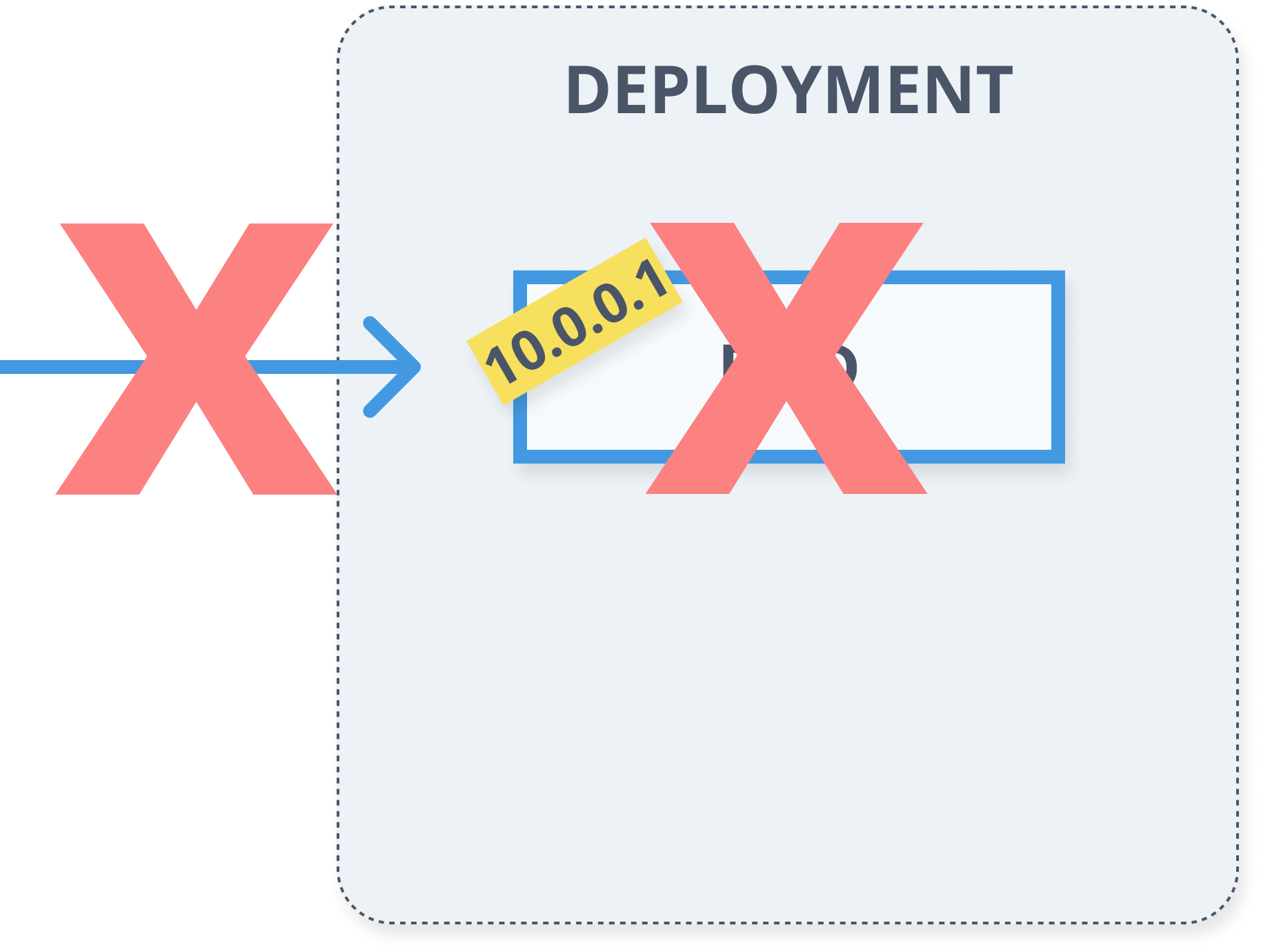

Deployments

- Describes desired state

- Manages updates

- Controlled roll-out from actual state to the desired state

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myapp
      labels:
        app: myapp
    spec:
      replicas: 5
      selector:
        matchLabels:
          app: myapp
      template:
        metadata:
          labels:
            app: myapp
        spec:
          containers:
          - name: myapp-container
            image: busybox
            command: ['sh', '-c', 'echo Hello Minsk! && sleep 3600']
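The controlled roll-out can be tuned through the Deployment's update strategy. The fragment below is a minimal sketch, assuming the `myapp` Deployment from this section; the `maxSurge` and `maxUnavailable` values are illustrative assumptions, not taken from the slides.

```yaml
# Fragment of the myapp Deployment spec; only the strategy block is new.
spec:
  replicas: 5
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1        # assumed value: at most 1 pod above the desired count during an update
      maxUnavailable: 1  # assumed value: at most 1 pod may be unavailable during an update
```

With these values, an update replaces pods roughly one at a time while keeping at least 4 of the 5 replicas available.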

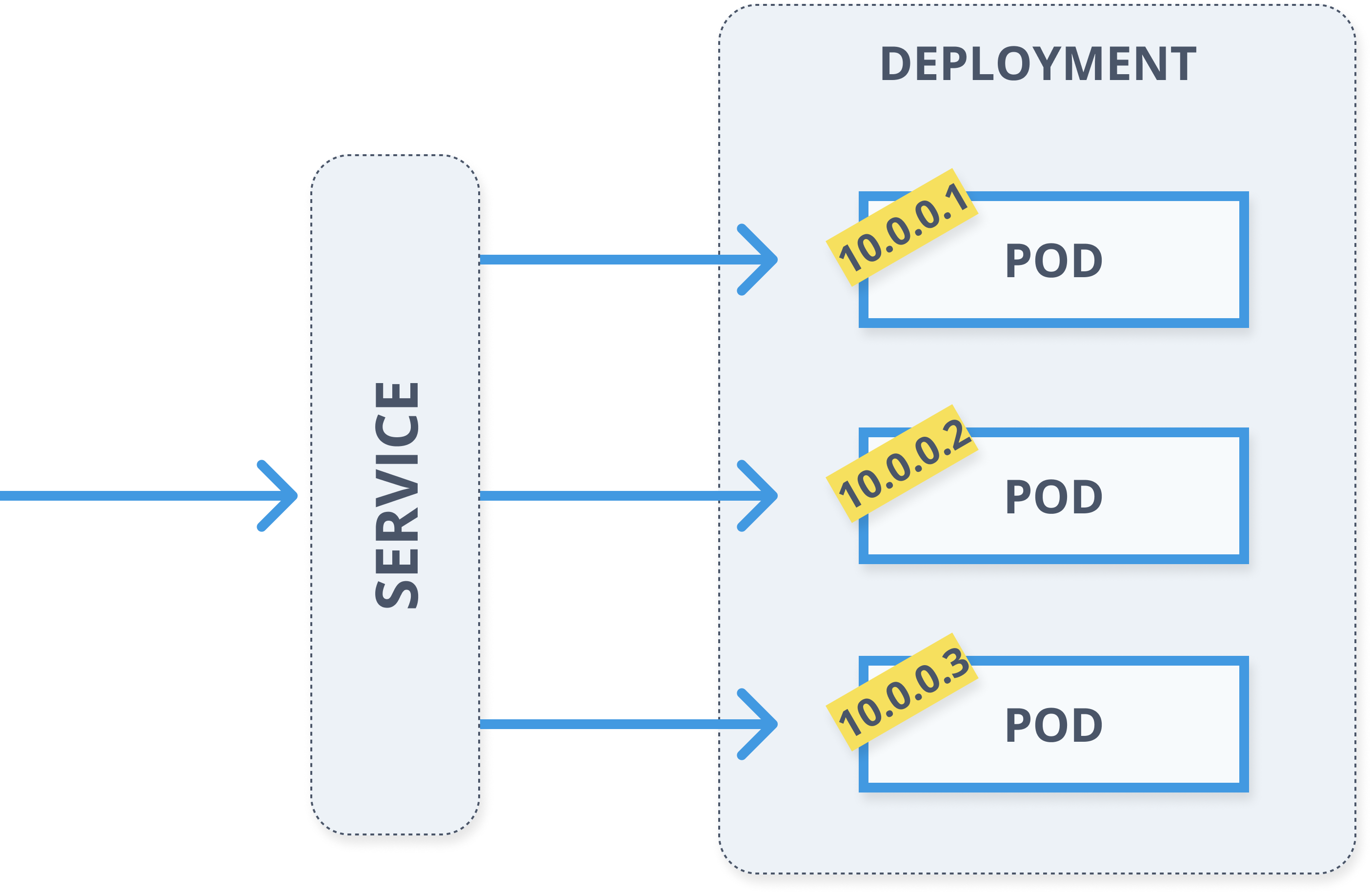

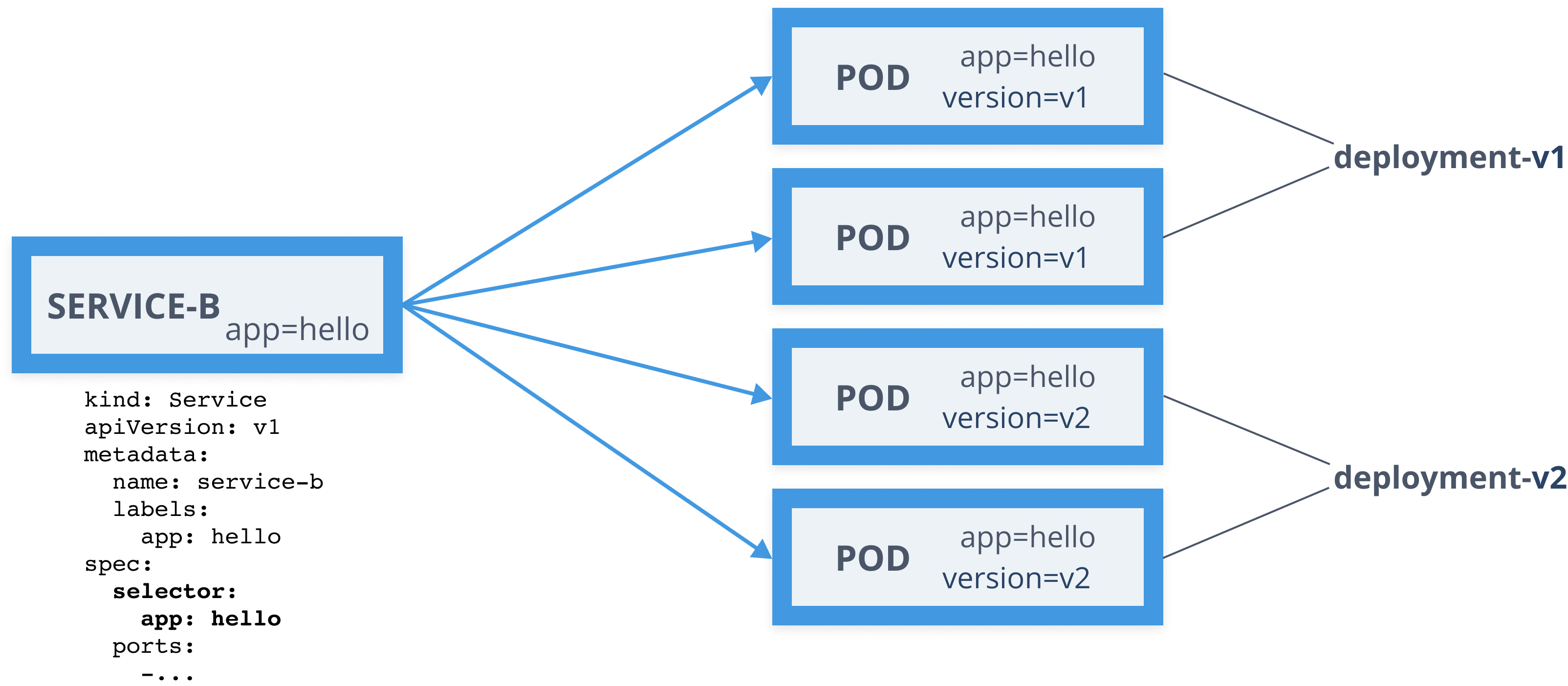

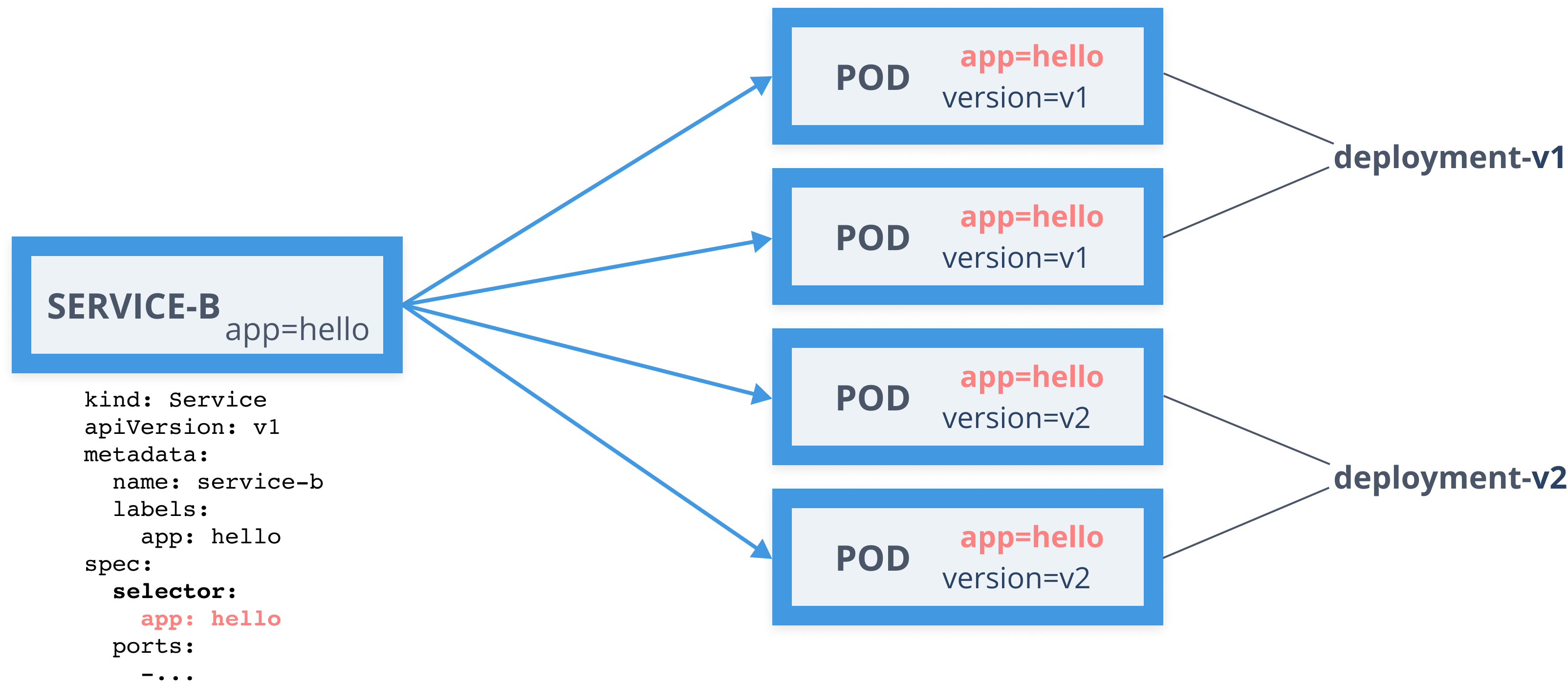

Services

- Define a logical set of pods

- Pods are determined using labels (selector)

- Reliable, fixed IP address

- Automatic DNS entries

- E.g. hello-web.default

    kind: Service
    apiVersion: v1
    metadata:
      name: myapp
      labels:
        app: myapp
    spec:
      selector:
        app: myapp
      ports:
      - port: 80
        name: http
        targetPort: 3000

Services

ClusterIP

- Service is exposed on a cluster-internal IP (default)

NodePort

- Uses the same static port on each cluster node to expose the service

LoadBalancer

- Uses the cloud provider's load balancer to expose the service

ExternalName

- Maps the service to a DNS name
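The service type is selected with the `type` field of the Service spec. The sketch below reuses the `app: myapp` selector and ports from the earlier Service example; the resource name and the `nodePort` value are assumptions made for illustration.

```yaml
kind: Service
apiVersion: v1
metadata:
  name: myapp-nodeport   # hypothetical name
spec:
  type: NodePort         # omit `type` to get the default, ClusterIP
  selector:
    app: myapp
  ports:
  - port: 80
    name: http
    targetPort: 3000
    nodePort: 30080      # assumed value; must fall within the cluster's NodePort range (30000-32767 by default)
```

Changing `type` to `LoadBalancer` (and dropping `nodePort`) would ask the cloud provider for an external load balancer instead.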

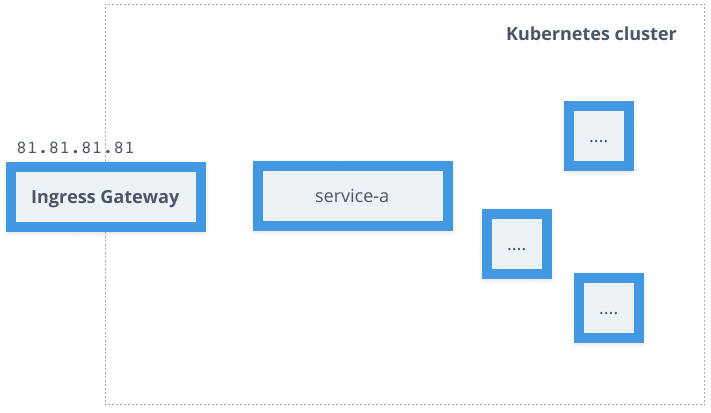

Ingress

- Exposes HTTP/HTTPS routes from outside the cluster to services within the cluster

- Ingress controller uses a load balancer to handle the traffic (based on the ingress rules)

- Fanout and name-based virtual hosting support:

- blog.example.com -> blog-service.default

- chat.example.com -> chat-service.default

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: ingress-example
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
    spec:
      rules:
      - host: blog.example.com
        http:
          paths:
          - path: /
            backend:
              serviceName: blog-service
              servicePort: 3000
          - path: /api
            backend:
              serviceName: blog-api
              servicePort: 8080

Config Maps

- Stores configuration values (key-value pairs)

- Values consumed in pods as:

- environment variables

- files

- Helps separate app code from configuration

- Must exist before pods consume them (unless marked as optional)

- Must be in the same namespace as the pods that consume them
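Both consumption styles can be sketched in a single pod. The example assumes the `hello-kube-config` ConfigMap from this section (keys `count` and `hello`); the pod name, the `HELLO` variable, and the `/etc/config` mount path are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hello-kube            # hypothetical pod name
  namespace: default          # same namespace as the ConfigMap
spec:
  containers:
  - name: hello-kube
    image: busybox
    command: ['sh', '-c', 'echo "hello=$HELLO" && cat /etc/config/count && sleep 3600']
    env:
    - name: HELLO             # value consumed as an environment variable
      valueFrom:
        configMapKeyRef:
          name: hello-kube-config
          key: hello
          optional: true      # pod can start even if the ConfigMap or key is missing
    volumeMounts:
    - name: config            # each key becomes a file under /etc/config
      mountPath: /etc/config
  volumes:
  - name: config
    configMap:
      name: hello-kube-config
```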

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: hello-kube-config
      namespace: default
    data:
      count: "1"   # ConfigMap values must be strings, so numbers are quoted
      hello: world

Secrets

- For storing and managing small amounts of sensitive data (passwords, tokens, keys)

- Referenced as files in a volume, mounted from a secret

- Base64 encoded (encoding, not encryption)

- Types: generic, Docker registry, TLS
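A minimal sketch of a generic secret mounted as files in a volume follows. All names, the mount path, and the credential values are made up for illustration.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials        # hypothetical name
type: Opaque                  # the "generic" secret type
data:
  username: YWRtaW4=          # base64 of "admin"
  password: cGFzc3dvcmQ=      # base64 of "password"
---
apiVersion: v1
kind: Pod
metadata:
  name: secret-reader         # hypothetical name
spec:
  containers:
  - name: app
    image: busybox
    command: ['sh', '-c', 'ls /etc/secrets && sleep 3600']
    volumeMounts:
    - name: credentials       # each key appears as a file under /etc/secrets
      mountPath: /etc/secrets
      readOnly: true
  volumes:
  - name: credentials
    secret:
      secretName: db-credentials
```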

Namespaces

- Provide a unique scope for resources

    my-namespace.my-service
    another-namespace.my-service

- (Most) Kubernetes resources live inside a namespace

- Can't be nested

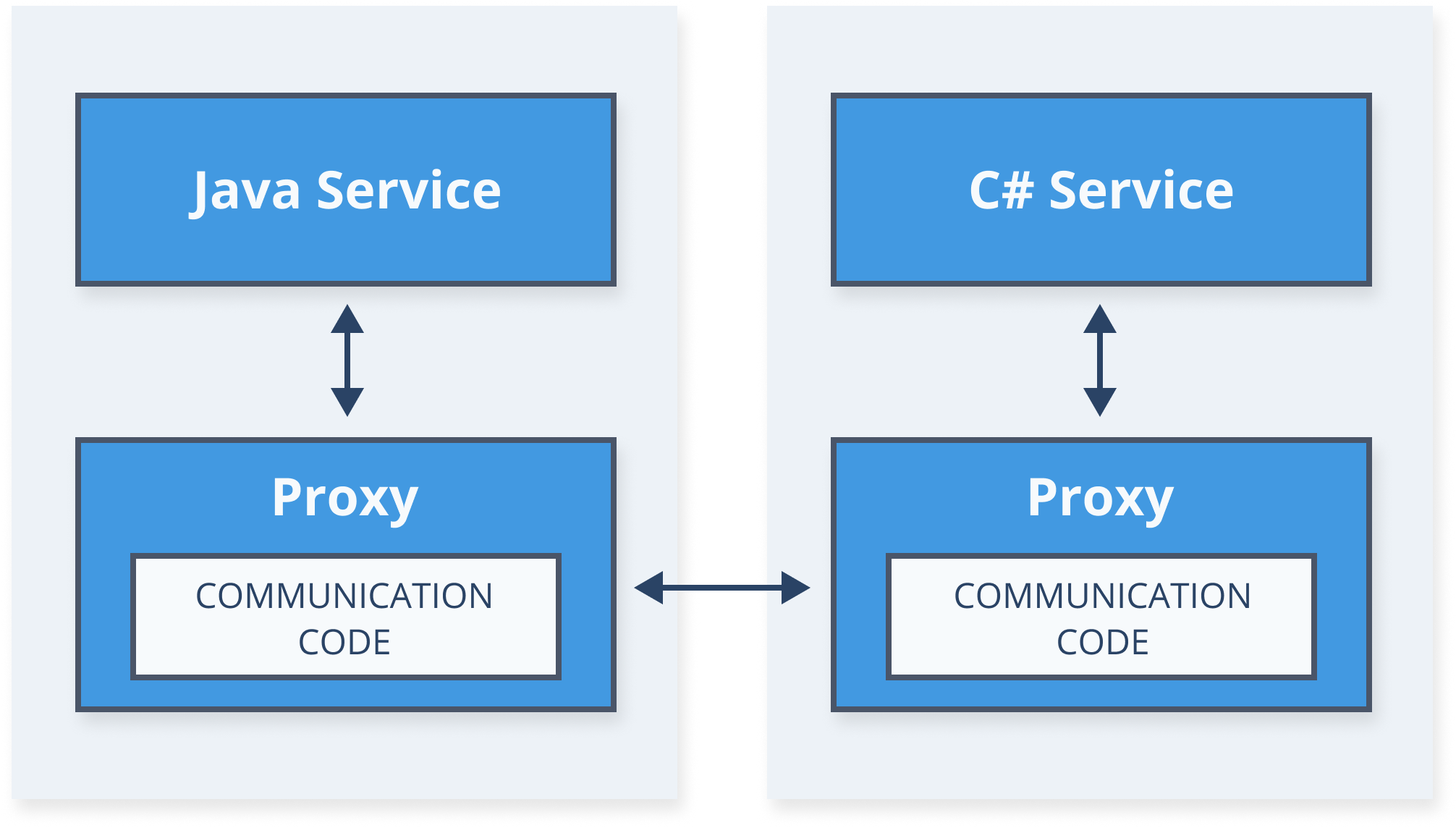

Service Mesh

Dedicated infrastructure layer to connect, manage, and secure workloads by managing the communication between them

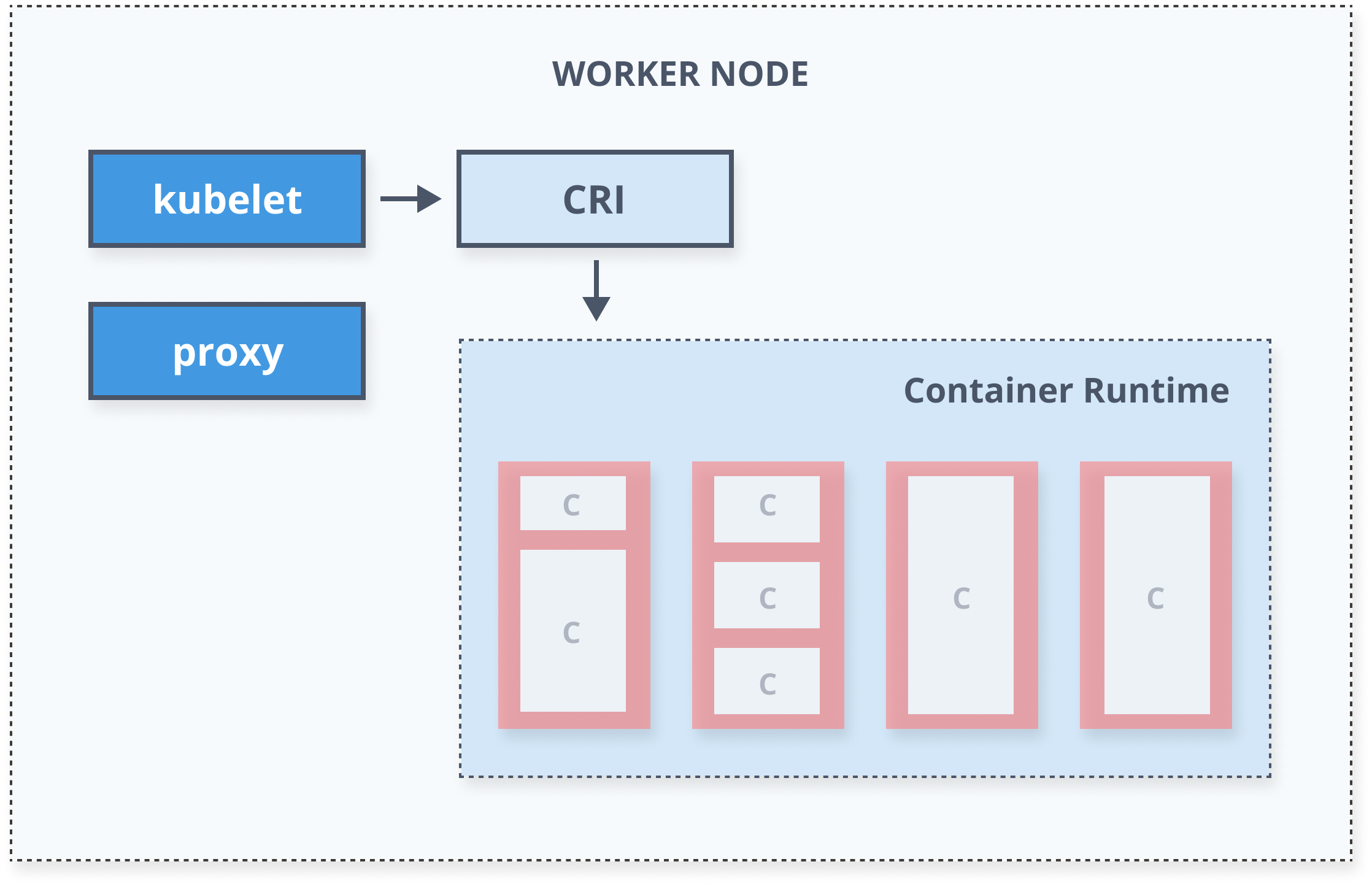

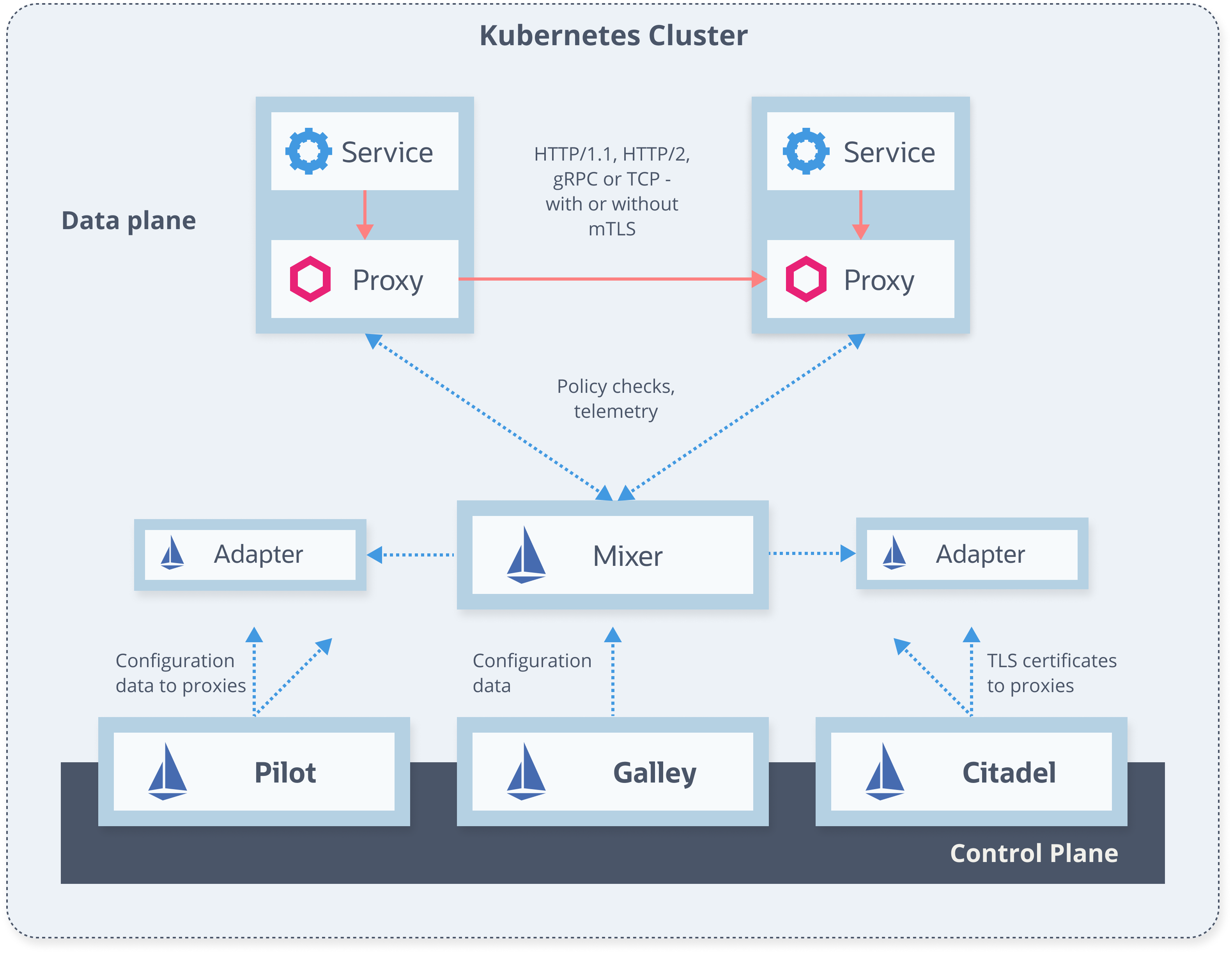

Service Mesh - Architecture

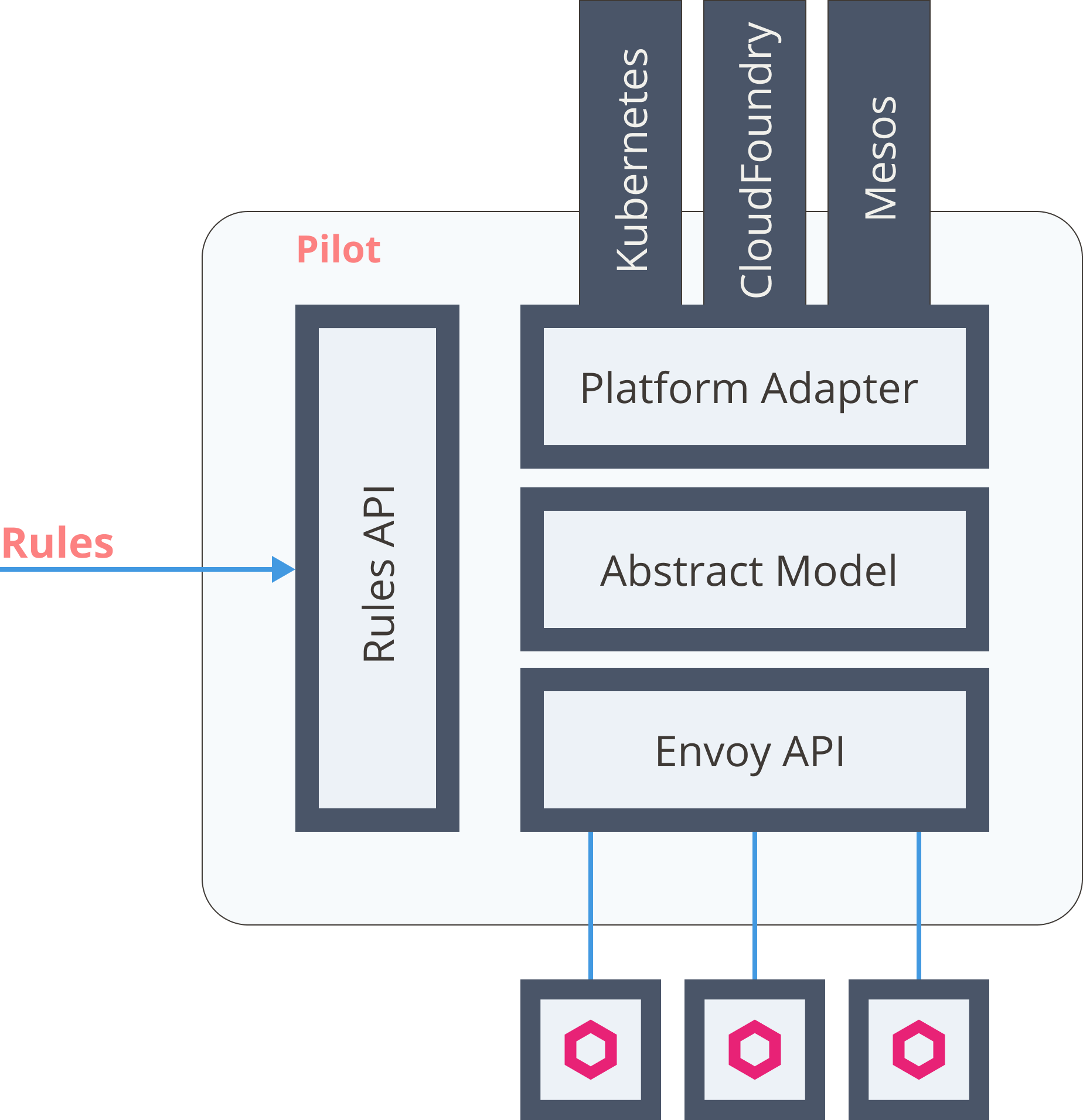

Data plane (proxies)

- Run next to each service instance (or one per host)

- Istio uses Envoy proxy

- Intercept all incoming/outgoing requests (iptables)

- Configured with rules on how to handle traffic

- Emit metrics

Control plane

- Validates rules

- Translates high-level rules to proxy configuration

- Updates the proxies/configuration

- Collects metrics from proxies

Service Mesh - Features

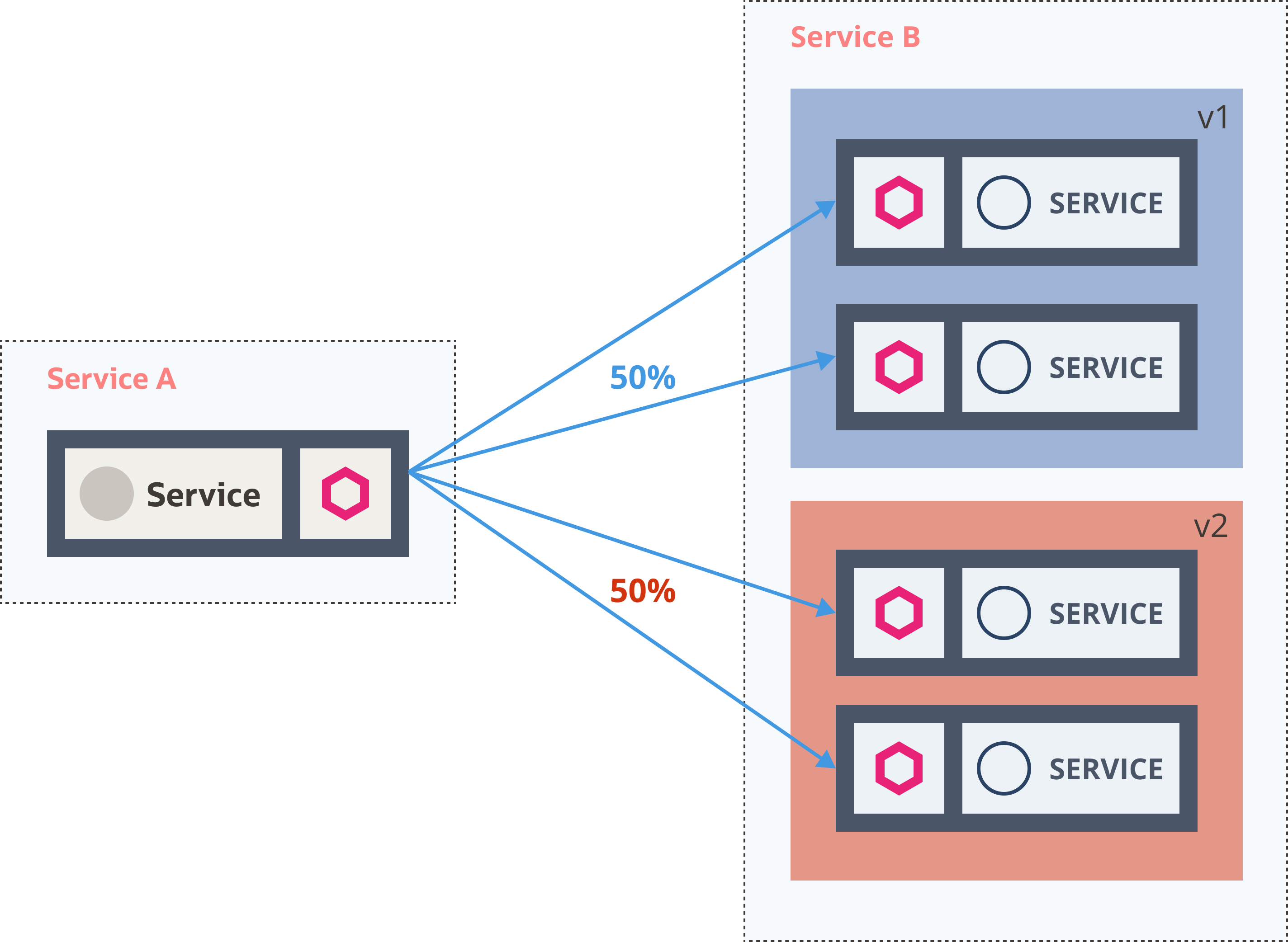

Connect

- Layer 7 routing and traffic management

- %-based traffic split, request matching (URIs, headers, scheme, method, ...)

- Circuit breakers, timeouts and retries

Manage

- Telemetry (proxies collect metrics automatically)

- Visibility into service communication without code changes

Secure

- Secure communication between services (mutual TLS)

- Identity + cert for each service

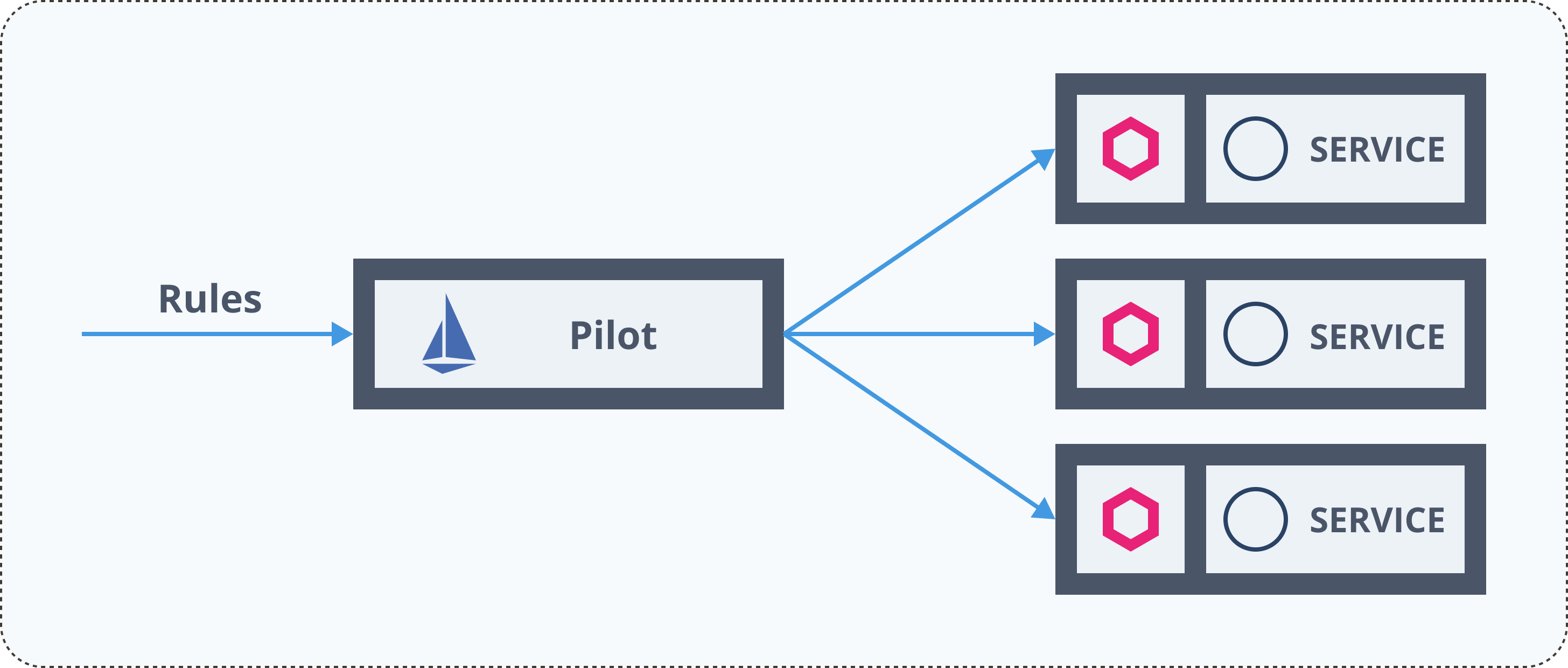

Traffic Management

Service Mesh - Istio

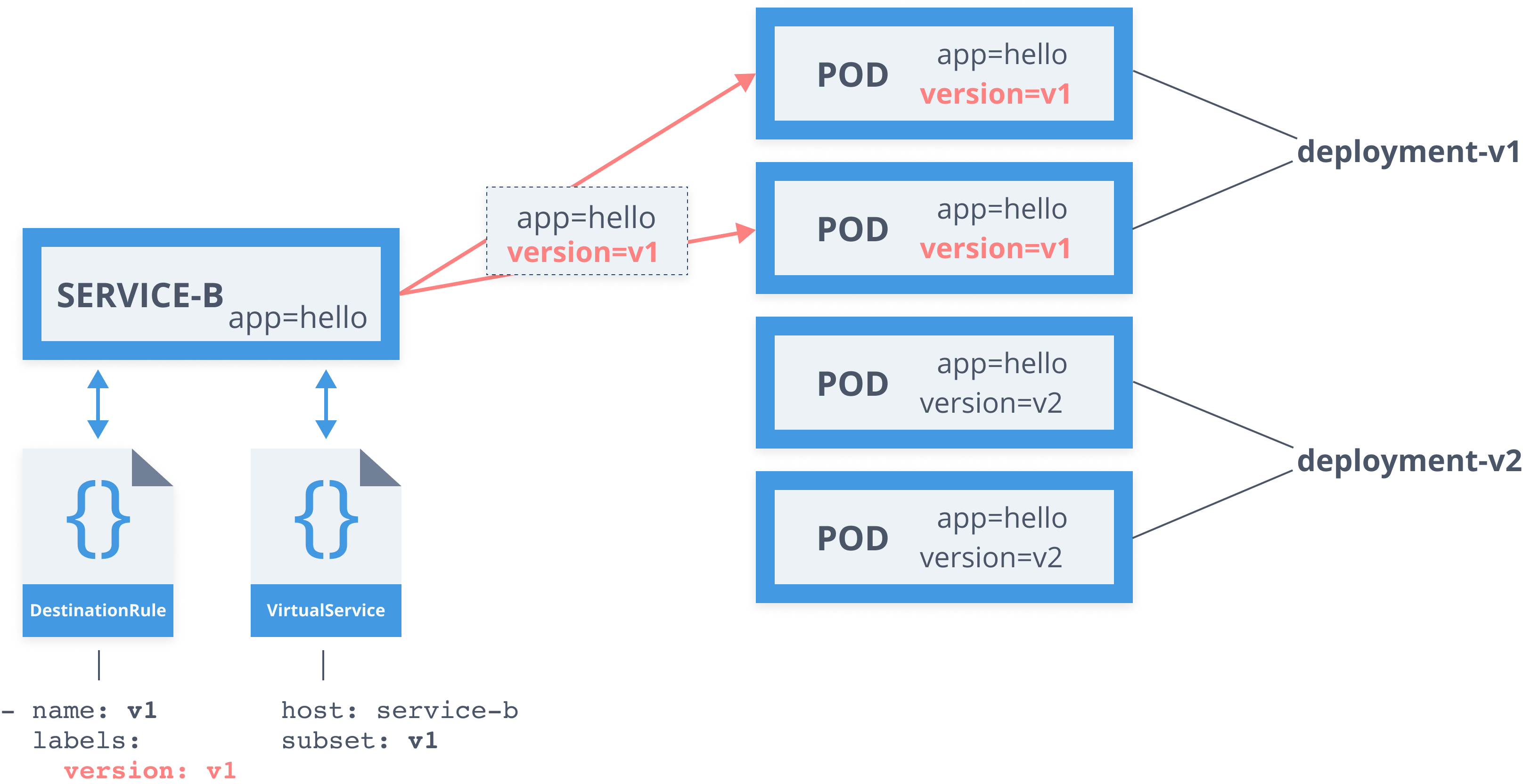

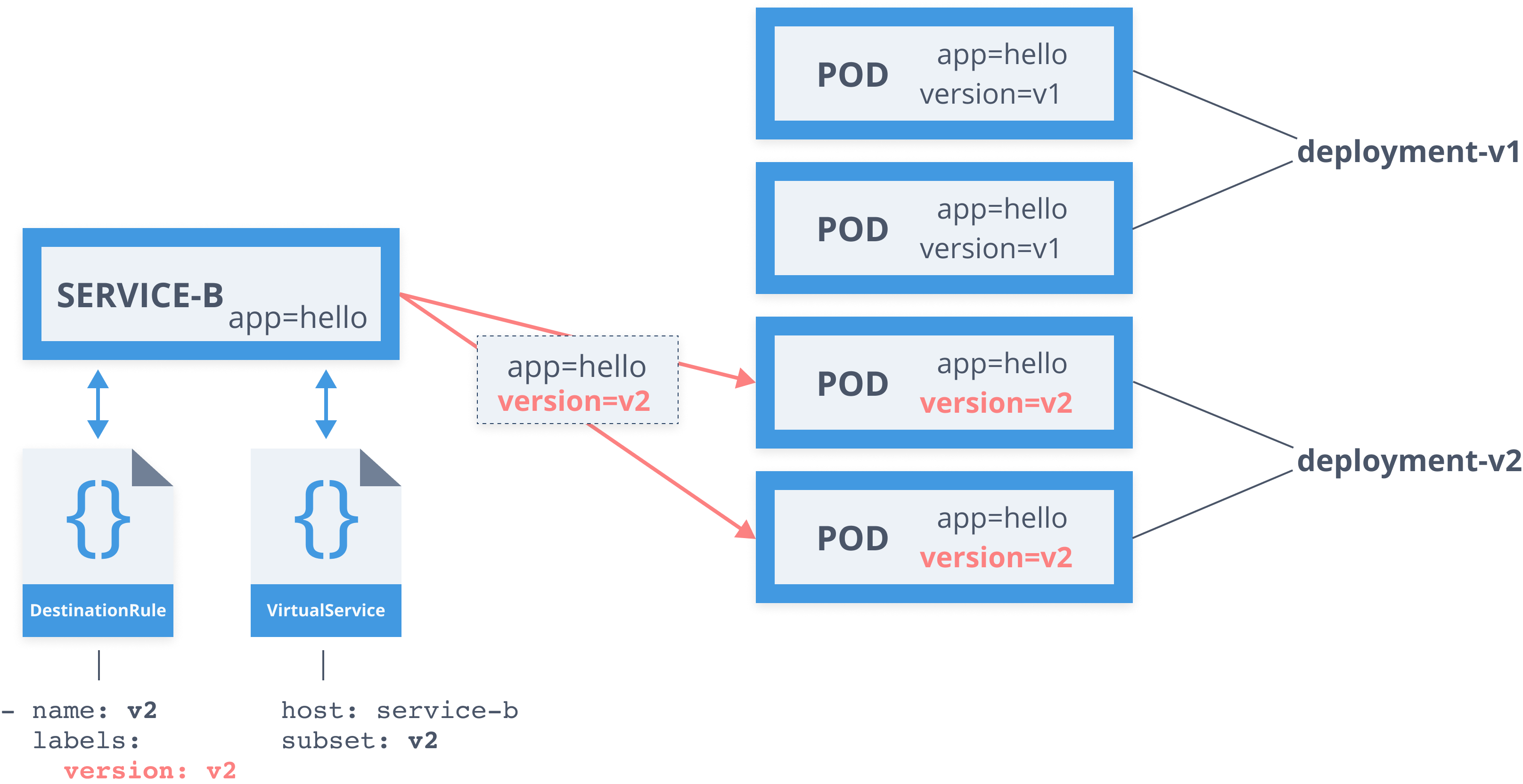

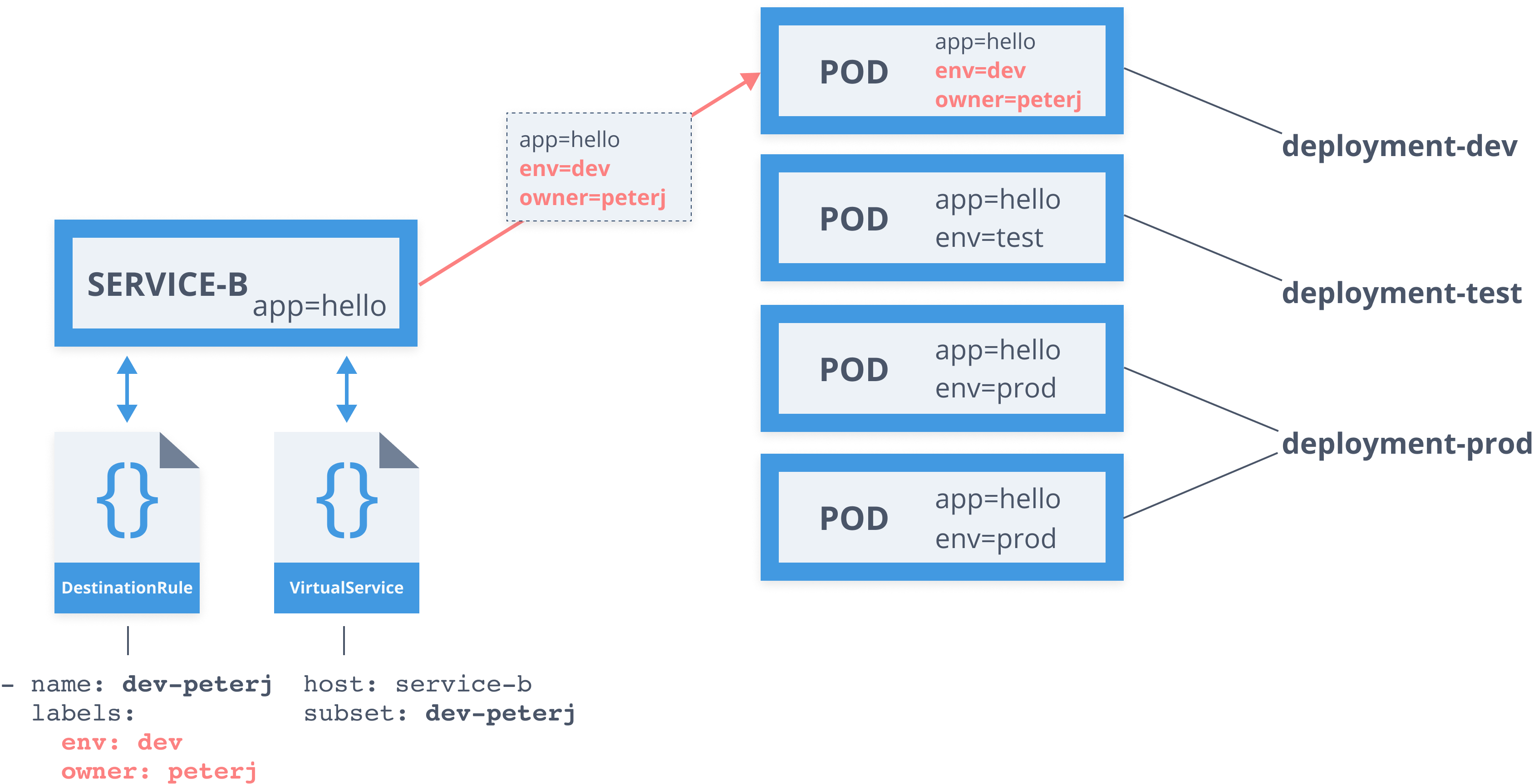

Traffic Management Resources

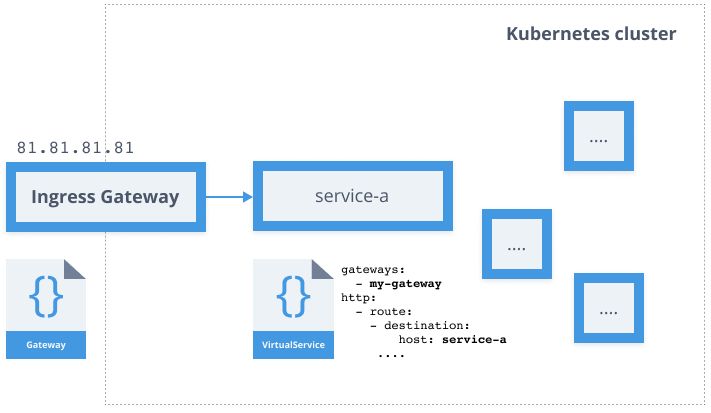

- Gateway

- VirtualService

- DestinationRule

- ServiceEntry

- Sidecar

Service Mesh - Virtual Service

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: service-b
    spec:
      hosts:
      - service-b.default.svc.cluster.local
      http:
      - route:
        - destination:
            host: service-b
            subset: v1
          weight: 98
        - destination:
            host: service-b
            subset: v2
          weight: 2

Service Mesh - Destination Rule

    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: service-b
    spec:
      host: service-b.default.svc.cluster.local
      subsets:
      - name: v1
        labels:
          version: v1
      - name: v2
        labels:
          version: v2
      trafficPolicy:
        tls:
          mode: ISTIO_MUTUAL

Destination rule

    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: service-b
    spec:
      host: service-b.default.svc.cluster.local
      subsets:
      - name: v1
        labels:
          version: v1
      - name: v2
        labels:
          version: v2

Virtual service

    ...
      http:
      - route:
        - destination:
            host: service-b
            subset: v1
          weight: 30

Service Mesh - Service Entry

    apiVersion: networking.istio.io/v1alpha3
    kind: ServiceEntry
    metadata:
      name: movie-db
    spec:
      hosts:
      - api.themoviedb.org
      ports:
      - number: 443
        name: https
        protocol: HTTPS
      resolution: DNS
      location: MESH_EXTERNAL

Service Mesh - Gateway

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: gateway
    spec:
      selector:
        istio: ingressgateway
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "hello.example.com"
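A Gateway only opens the port and host; traffic reaches a service once a VirtualService is bound to it through the `gateways` field. The sketch below assumes the Gateway named `gateway` shown here; the VirtualService name and the `hello-web` destination service are hypothetical.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: hello-web              # hypothetical name
spec:
  hosts:
  - "hello.example.com"        # must match a host exposed by the Gateway
  gateways:
  - gateway                    # name of the Gateway resource
  http:
  - route:
    - destination:
        host: hello-web.default.svc.cluster.local   # assumed in-cluster service
        port:
          number: 80
```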

Service Mesh - Sidecar

    apiVersion: networking.istio.io/v1alpha3
    kind: Sidecar
    metadata:
      name: default
      namespace: prod-us-west-1
    spec:
      egress:
      - hosts:
        - 'prod-us-west-1/*'
        - 'prod-apis/*'
        - 'istio-system/*'

Service Mesh - Traffic Management

- Define subsets in DestinationRule

- Define route rules in VirtualService

- Define one or more destinations with weights
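Route rules can also match on request properties before falling back to a weighted split. The sketch below assumes the `v1` and `v2` subsets of `service-b` are already defined in a DestinationRule; the `x-beta-user` header is a hypothetical name chosen for illustration.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: service-b
spec:
  hosts:
  - service-b.default.svc.cluster.local
  http:
  # Rule 1: requests carrying the (hypothetical) header always go to v2.
  - match:
    - headers:
        x-beta-user:
          exact: "true"
    route:
    - destination:
        host: service-b
        subset: v2
  # Rule 2: everything else is split by weight.
  - route:
    - destination:
        host: service-b
        subset: v1
      weight: 98
    - destination:
        host: service-b
        subset: v2
      weight: 2
```

Rules are evaluated in order, so the header match has to come before the catch-all weighted route.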

Demo

Istio Traffic Routing

Service Resiliency

Resiliency

Ability to recover from failures and continue to function

Return the service to a fully functioning state after failure

Resiliency

High availability

- Healthy

- No significant downtime

- Responsive

- Meeting SLAs

Disaster recovery

- For when the design can't handle the impact of failures

- Data backup & archiving

Resiliency Strategies

- Load Balancing

- Timeouts and retries

- Circuit breakers and bulkhead pattern

- Data replication

- Graceful degradation

- Rate limiting

Service Mesh - Timeouts

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: service-b
    spec:
      hosts:
      - service-b.default.svc.cluster.local
      http:
      - route:
        - destination:
            host: service-b
            subset: v1
        timeout: 5s

Service Mesh - Retries

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: service-b
    spec:
      hosts:
      - service-b.default.svc.cluster.local
      http:
      - route:
        - destination:
            host: service-b
            subset: v1
        retries:
          attempts: 3
          perTryTimeout: 3s
          retryOn: gateway-error,connect-failure

Service Mesh - Circuit Breakers

    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: service-b
    spec:
      host: service-b.default.svc.cluster.local
      trafficPolicy:
        connectionPool:   # tcp/http limits are nested under connectionPool
          tcp:
            maxConnections: 1
          http:
            http1MaxPendingRequests: 1
            maxRequestsPerConnection: 1
        outlierDetection:
          consecutiveErrors: 1
          interval: 1s
          baseEjectionTime: 3m
          maxEjectionPercent: 100

Service Mesh - Delays

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: service-b
    spec:
      hosts:
      - service-b.default.svc.cluster.local
      http:
      - route:
        - destination:
            host: service-b
            subset: v1
        fault:
          delay:
            percentage:
              value: 50   # percentage is an object with a value field
            fixedDelay: 2s

Service Mesh - Aborts

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: service-b
    spec:
      hosts:
      - service-b.default.svc.cluster.local
      http:
      - route:
        - destination:
            host: service-b
            subset: v1
        fault:
          abort:
            percentage:
              value: 50   # percentage is an object with a value field
            httpStatus: 404

Demo

Service Resiliency

Security

Access Control

Can a principal perform an action on an object?

Principal = user

Action = delete

Object = file

Authentication (authn)

- Verify credential is valid/authentic

- Istio: X.509 certificates

- Identity encoded in certificate

Authorization (authz)

- Is principal allowed to perform an action on an object?

- Istio: RBAC policies

- Role-based access control

Authentication and authorization work together

Identity - SPIFFE

- SPIFFE (Secure Production Identity Framework for Everyone)

- Specially formed X.509 certificate with an ID (e.g. spiffe://cluster.local/ns/default/sa/default)

- Kubernetes: service account is used

Identity - SPIFFE

Concepts:

- Identity (URI)

spiffe://cluster.local/ns/default/sa/default

- Encoding of identity into SVID (SPIFFE Verifiable Identity Document)

- X.509 and Subject Alternative Name (SAN) field

- API for issuing and retrieving SVIDs

Key management

- Citadel

- Certificate authority (CA)

- Signs certificate requests that create X.509 certificates

- Citadel (node) agents (SDS - secret discovery service)

- Broker between Citadel and Envoy proxies

- Envoy

Mutual TLS (mTLS)

Flow

Traffic from client gets routed to the client side proxy

Client side proxy starts mTLS handshake

- Secure naming check: verify service account in the cert can run the target service

Client and server side proxies establish mTLS connection

Server side proxy forwards traffic to the server service

Istio Auth Policies

Authn policy:

- Controls how proxies communicate with one another

- mTLS on/off

Authz policy:

- Requires authn

- Configures which identities are allowed to communicate

Configuring mTLS/JWT

- Policy resource (authentication.istio.io/v1alpha1.Policy)

Scope:

- Mesh < namespace < service

Also supports JWT
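As a rough sketch, a namespace-scoped policy that requires mTLS could look like the following. It uses the `authentication.istio.io/v1alpha1` API named on this slide, which later Istio releases replaced with `PeerAuthentication`, so treat it as illustrative for the Istio version the slides target. The `default` namespace is an assumption.

```yaml
apiVersion: authentication.istio.io/v1alpha1
kind: Policy
metadata:
  name: default          # a namespace-wide policy is named "default"
  namespace: default     # assumed namespace
spec:
  peers:
  - mtls: {}             # require mutual TLS for all services in the namespace
  # To scope the policy to one service instead, give it another name and add:
  # targets:
  # - name: service-b
```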

Configuring authorization

Who can talk to whom

- Uses RBAC (role-based access control)

Service role

- Actions that can be performed on service by any principal with the role

Service role binding

- Assigns roles to principals (principals = service identities = ServiceAccounts)
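The two resources can be sketched as follows, assuming the `rbac.istio.io/v1alpha1` API from the Istio version these slides target (later releases replaced it with `AuthorizationPolicy`). The role name, binding name, and the `service-a` service account are hypothetical.

```yaml
apiVersion: rbac.istio.io/v1alpha1
kind: ServiceRole
metadata:
  name: service-b-viewer       # hypothetical role name
  namespace: default
spec:
  rules:
  - services: ["service-b.default.svc.cluster.local"]
    methods: ["GET"]           # action allowed on the service
---
apiVersion: rbac.istio.io/v1alpha1
kind: ServiceRoleBinding
metadata:
  name: bind-service-b-viewer  # hypothetical binding name
  namespace: default
spec:
  subjects:
  - user: "cluster.local/ns/default/sa/service-a"   # assumed service account identity
  roleRef:
    kind: ServiceRole
    name: service-b-viewer
```

Here any workload running as the `service-a` service account may issue GET requests to `service-b`; everything else is denied once RBAC is enabled for that service.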

Configuring RBAC

- ClusterRbacConfig resource (rbac.istio.io/v1alpha1)

- Multiple modes:

- On, off

- On with inclusion, on with exclusion
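A minimal sketch of the "on with inclusion" mode, again assuming the `rbac.istio.io/v1alpha1` API of the Istio version the slides target; the included namespace is an assumption.

```yaml
apiVersion: rbac.istio.io/v1alpha1
kind: ClusterRbacConfig
metadata:
  name: default                # the cluster-wide config is named "default"
spec:
  mode: 'ON_WITH_INCLUSION'    # other modes: ON, OFF, ON_WITH_EXCLUSION
  inclusion:
    namespaces: ["default"]    # assumed: enforce RBAC only for this namespace
```

Starting with inclusion for a single namespace fits the "start small" advice: RBAC is enforced only where roles and bindings have already been defined.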

Istio vs. Linkerd vs. Consul Connect?

- Linkerd: Kubernetes only

- Consul: agent per-node + proxies

- Linkerd: no circuit breaking*

- Consul: no failure injection

https://github.com/linkerd/linkerd2/issues/2846

How to get started?

- Do you need a service mesh?

- Start small and slow:

- Learn and understand the resources

- Apply to a subset of services

- Understand the metrics, logs, dashboards

- Repeat

Thank you

FEEDBACK

Slides: https://slides.peterj.dev/jfuture-2019

Exercises: https://github.com/peterj/jfuture

Contact